check_hadoop_namenode.pl (Advanced Nagios Plugins Collection)

Compatible With

- Nagios 1.x

- Nagios 2.x

- Nagios 3.x

- Nagios XI

Hits

30557

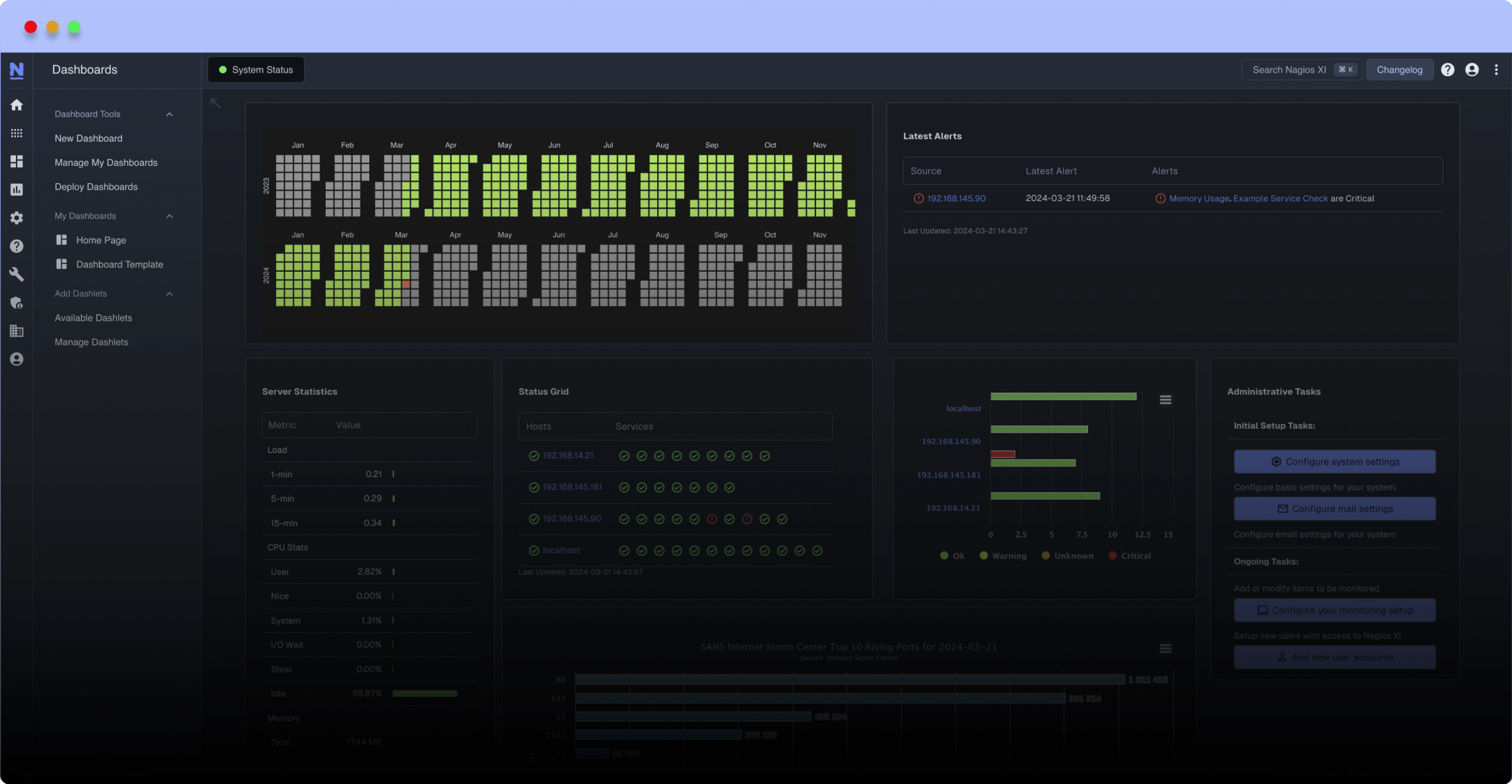

Meet The New Nagios Core Services Platform

Built on over 25 years of monitoring experience, the Nagios Core Services Platform provides insightful monitoring dashboards, time-saving monitoring wizards, and unmatched ease of use. Use it for free indefinitely.

Monitoring Made Magically Better

- Nagios Core on Overdrive

- Powerful Monitoring Dashboards

- Time-Saving Configuration Wizards

- Open Source Powered Monitoring On Steroids

- And So Much More!

Part of the Advanced Nagios Plugins Collection, download it here:

https://github.com/harisekhon/nagios-plugins

./check_hadoop_namenode.pl --help

Nagios Plugin to run various checks against the Hadoop HDFS Cluster via the Namenode JSP pages

This is an alternate rewrite of the functionality from my previous check_hadoop_dfs.pl plugin, using the Namenode JSP interface instead of the hadoop dfsadmin -report output

I recommend using the original check_hadoop_dfs.pl plugin, since it is better tested and has tighter output validation than this one, but this one is useful for the following reasons:

1. You can check your NameNode remotely via JSP, without having to change the NameNode setup to install the check_hadoop_dfs.pl plugin

2. It can check NameNode heap usage
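Heap usage has to be derived from the same human-readable summary that the JSP page displays. Below is a minimal Python sketch, assuming a summary line of the form "Heap Size is 113.5 MB / 966.69 MB (11%)" (a hypothetical example string; the exact wording differs between Hadoop versions) and binary unit multipliers. The function name heap_used_pct is illustrative and not part of the plugin:

```python
import re

# Assumption: binary multipliers (1 KB = 1024 bytes) for the displayed units
UNITS = {"B": 1, "KB": 1024, "MB": 1024 ** 2, "GB": 1024 ** 3,
         "TB": 1024 ** 4, "PB": 1024 ** 5}

# Hypothetical pattern for a line like "Heap Size is 113.5 MB / 966.69 MB (11%)"
HEAP_RE = re.compile(
    r"Heap Size is\s+([\d.]+)\s*([KMGTP]?B)\s*/\s*([\d.]+)\s*([KMGTP]?B)"
)


def heap_used_pct(page_text: str) -> float:
    """Return NameNode heap used as a percentage of the maximum heap."""
    match = HEAP_RE.search(page_text)
    if match is None:
        # Fail loudly rather than guess if the JSP output format has changed
        raise ValueError("heap summary not found; JSP output format may have changed")
    used = float(match.group(1)) * UNITS[match.group(2)]
    total = float(match.group(3)) * UNITS[match.group(4)]
    return used / total * 100
```

Recomputing the percentage from the two figures, rather than trusting a rounded "(11%)", gives a finer value to compare against the --heap-usage thresholds.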

Caveats:

1. It cannot currently detect corrupt or under-replicated blocks, since the JSP pages don't offer this information

2. There are no byte counters, so the plugin can only take the human-readable summary figures and multiply them out; because these are rounded summary figures, the results are marginally less accurate
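The second caveat can be made concrete. The following Python sketch, assuming binary multipliers (1 KB = 1024 bytes) and figures of the form "1.5 TB", multiplies a summary figure out to bytes and estimates how far off the result can be because the displayed number was rounded. Both function names are hypothetical helpers, not the plugin's own code:

```python
# Assumption: binary multipliers (1 KB = 1024 bytes) for the summary units
UNITS = {"B": 1, "KB": 1024, "MB": 1024 ** 2, "GB": 1024 ** 3,
         "TB": 1024 ** 4, "PB": 1024 ** 5}


def human_to_bytes(size: str) -> int:
    """Multiply out a human-readable figure such as '1.5 TB' into bytes."""
    number, unit = size.split()
    return int(float(number) * UNITS[unit.upper()])


def rounding_error_bytes(size: str) -> float:
    """Worst-case error caused by the figure having been rounded for display.

    '1.23 TB' could stand for anything from 1.225 TB to 1.235 TB, so the
    true byte count is only known to within half of the last shown digit.
    """
    number, unit = size.split()
    decimals = len(number.partition(".")[2])
    return 0.5 * 10 ** -decimals * UNITS[unit.upper()]
```

For example, "1.5 TB" multiplies out to 1649267441664 bytes, while "1.23 TB" is only known to within 0.005 TB (about 5.12 GB in the same binary units), which is the marginal inaccuracy described above.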

Note: This was created for Apache Hadoop 0.20.2 (r911707) and updated for CDH 4.3 (2.0.0-cdh4.3.0). If the JSP output changes across versions, this plugin will need to be updated to parse the changes

usage: check_hadoop_namenode.pl [ options ]

-H --host Namenode to connect to

-P --port Namenode port to connect to (defaults to 50070)

-s --hdfs-space Checks % HDFS space used against the given warning/critical thresholds

-r --replication Checks replication state: under-replicated blocks, blocks with corrupt replicas and missing blocks. Warning/critical thresholds apply to under-replicated blocks. Any corrupt replicas or missing blocks raise critical, since these can result in data loss

-b --balance Checks that the balance of HDFS space used % across datanodes is within thresholds. Lists the nodes out of balance in verbose mode

-m --node-count Checks the number of available datanodes against the given warning/critical thresholds as lower limits (inclusive). Any dead datanodes raise a warning

-n --node-list List of datanodes expected to be available on the namenode (non-switch args are appended to this list for convenience). Warning/critical thresholds default to zero if not specified

--heap-usage Check Namenode Heap % Used. Optional % thresholds may be supplied for warning and/or critical

-w --warning Warning threshold or ran:ge (inclusive)

-c --critical Critical threshold or ran:ge (inclusive)

-h --help Print description and usage options

-t --timeout Timeout in secs (default: 10)

-v --verbose Verbose mode

-V --version Print version and exit
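The -w/-c options drive several different checks: -s and --heap-usage treat thresholds as upper limits, while -m treats them as inclusive lower limits and also warns on any dead datanodes. The Python sketch below illustrates how such thresholds could be evaluated into Nagios exit codes. The threshold semantics (a single number as a limit, MIN:MAX as an inclusive range) and the balance metric (each datanode's deviation from the mean % used) are assumptions for illustration; the plugin's own parsing and balance calculation may differ, and all function names are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

OK, WARNING, CRITICAL = 0, 1, 2  # standard Nagios plugin exit codes

Bounds = Tuple[Optional[float], Optional[float]]


def parse_threshold(spec: str, lower_limit: bool = False) -> Bounds:
    """Turn a threshold string into an inclusive (low, high) acceptable range.

    Assumed semantics, for illustration only:
      "80"    -> value may be at most 80 (or at least 80 if lower_limit)
      "10:90" -> value must lie between 10 and 90 inclusive
    """
    if ":" in spec:
        low, high = spec.split(":", 1)
        return (float(low) if low else None, float(high) if high else None)
    value = float(spec)
    return (value, None) if lower_limit else (None, value)


def in_range(value: float, bounds: Bounds) -> bool:
    """True if value lies inside the inclusive bounds (None = unbounded)."""
    low, high = bounds
    return (low is None or value >= low) and (high is None or value <= high)


def check(value: float, warning: Optional[str], critical: Optional[str],
          lower_limit: bool = False) -> int:
    """Return the Nagios status for value; critical takes precedence over warning."""
    if critical is not None and not in_range(value, parse_threshold(critical, lower_limit)):
        return CRITICAL
    if warning is not None and not in_range(value, parse_threshold(warning, lower_limit)):
        return WARNING
    return OK


def check_node_count(live: int, dead: int, warning: str, critical: str) -> int:
    """-m style check: inclusive lower limits; any dead node forces at least WARNING."""
    status = check(live, warning, critical, lower_limit=True)
    return max(status, WARNING) if dead > 0 else status


def check_balance(used_pct: Dict[str, float], warning: str,
                  critical: str) -> Tuple[int, List[str]]:
    """-b style check: compare each datanode's used % to the cluster mean.

    Returns the status for the largest deviation plus the nodes exceeding the
    warning threshold (the kind of list a verbose mode might print).
    """
    mean = sum(used_pct.values()) / len(used_pct)
    deviations = {node: abs(pct - mean) for node, pct in used_pct.items()}
    status = check(max(deviations.values()), warning, critical)
    limit = parse_threshold(warning)
    out_of_balance = sorted(n for n, d in deviations.items() if not in_range(d, limit))
    return status, out_of_balance
```

For example, check(85.0, "80", "90") returns WARNING, check_node_count(4, 1, "5", "3") returns WARNING, and check_balance({"dn1": 60.0, "dn2": 65.0, "dn3": 70.0, "dn4": 85.0}, "8", "20") returns (WARNING, ["dn1", "dn4"]), because the mean is 70% and dn1 and dn4 deviate by 10 and 15 percentage points.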
