
check_url

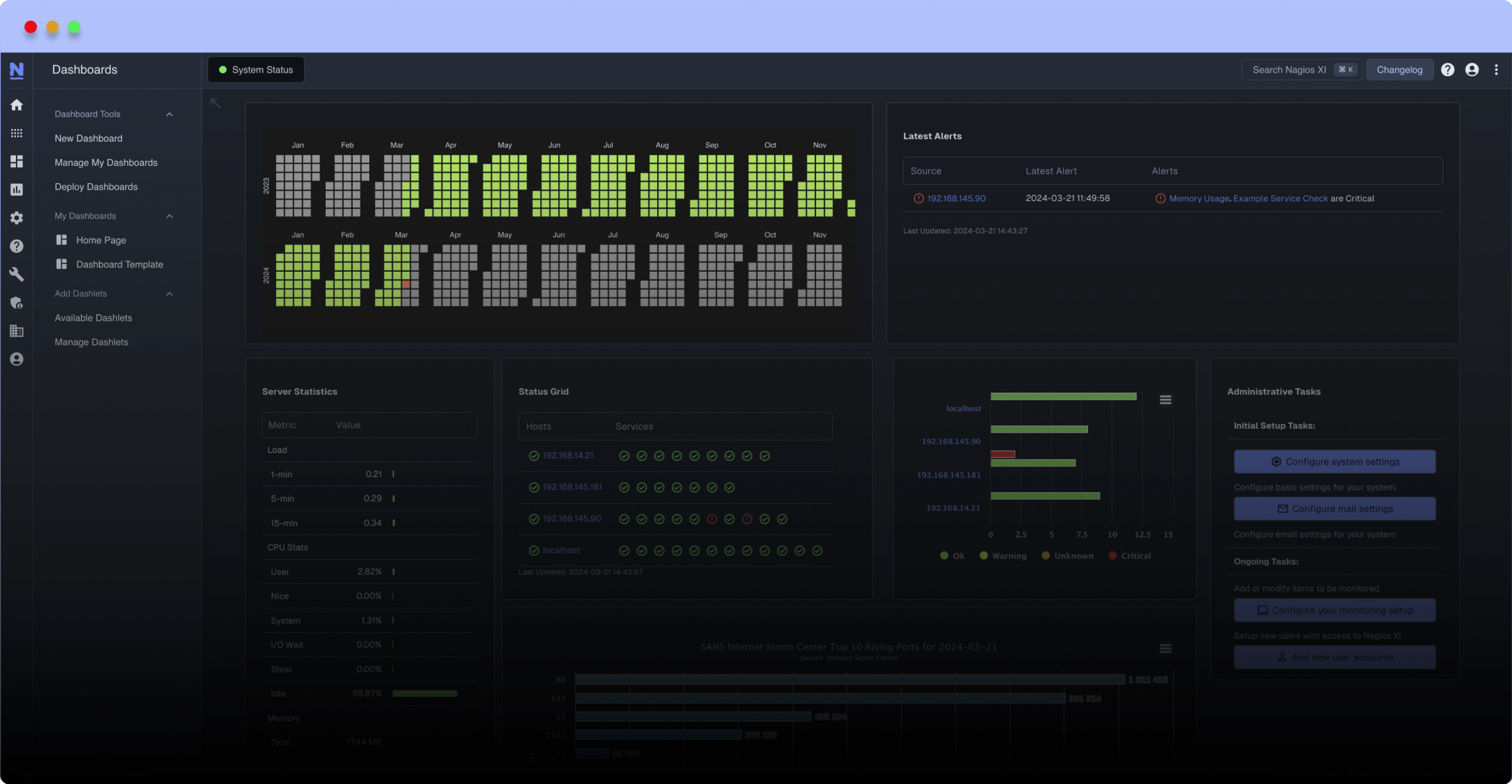


A Perl script to check the availability of HTTP URLs.

Returns the HTTP status code in the status information.
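The next snippet is a rough illustration of how an HTTP status code can be turned into a Nagios state. It uses the same code lists as the check_url.pl script further down this page: 200 is OK; 400, 401, 403, 404 and 408 are WARNING; 500 to 504 are CRITICAL. The snippet is a minimal sketch, not the plugin's own code. It replaces the script's three loops with a single hash lookup. `classify_status` is a hypothetical helper name. The exit codes follow the standard Nagios convention (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN).

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical lookup table: HTTP status code => Nagios state name,
# built from the same code lists the check_url.pl script uses.
my %STATE_FOR = (
    (map { ($_ => 'OK') }       qw(200)),
    (map { ($_ => 'WARNING') }  qw(400 401 403 404 408)),
    (map { ($_ => 'CRITICAL') } qw(500 501 502 503 504)),
);

# Standard Nagios plugin exit codes.
my %EXIT_CODE = (OK => 0, WARNING => 1, CRITICAL => 2, UNKNOWN => 3);

# Hypothetical helper: return (state name, exit code) for a status code.
sub classify_status {
    my ($code) = @_;
    # Codes that appear in no list (e.g. 302) fall through to UNKNOWN.
    my $state = $STATE_FOR{$code} // 'UNKNOWN';
    return ($state, $EXIT_CODE{$state});
}

my ($state, $exit) = classify_status('404');
print "$state ($exit)\n";   # prints "WARNING (1)"
```

Any status code not listed in one of the three groups ends up as UNKNOWN, which matches the script's default `$state`.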

Unlike check_http, this script can be used to test mission-critical URLs such as http://some.com/?status/other.
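URLs like this one contain characters such as `?` and `&` that a shell may interpret. How the URL is handed to wget therefore matters.

The sketch below avoids the shell entirely, assuming wget is installed at /usr/bin/wget. It builds a list of arguments for Perl's `system`. When `system` receives more than one argument, it calls wget directly and no shell is involved. wget's `--output-file` option writes wget's log, including the `-S` server response headers, to a file. That replaces the `2>file` shell redirection. `wget_command` is a hypothetical helper name.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical helper: build the wget argument list for one check.
# The URL is a single list element, so ?, & and ; reach wget unchanged
# when the list is passed to system() (no shell is involved).
sub wget_command {
    my ($url, $html, $log) = @_;
    return ('/usr/bin/wget', '-S', '--no-check-certificate',
            "--output-document=$html",   # page body
            "--output-file=$log",        # wget log incl. server headers
            $url);
}

my @cmd = wget_command('http://some.com/?status/other&x=1',
                       "/tmp/tmp_$$.html", "/tmp/tmp_$$.res1");
print join(' ', @cmd), "\n";
# system(@cmd);   # would run wget; its exit status is then in $?
```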

Works with both https and http.


Reviews (1)

by saf42, January 7, 2016

1 of 1 people found this review helpful

Added the process ID (PID) to the temporary file names so that several instances of the script can run in parallel.
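Putting the PID in the file name prevents collisions between concurrent runs. Perl's core File::Temp module is one possible alternative. It creates a file with a unique random name atomically, so the file name does not depend on the PID. It can also delete the file automatically when the program exits.

This is a minimal sketch under those assumptions. The `check_url_XXXXXX` template is an illustrative name, not something the plugin uses.

```perl
#!/usr/bin/perl
use strict;
use warnings;
use File::Temp qw(tempfile);

# Create a uniquely named temporary file in the system temp directory.
# The trailing X's in the template are replaced with random characters;
# UNLINK => 1 removes the file automatically when the program exits.
my ($fh, $filename) = tempfile('check_url_XXXXXX',
                               TMPDIR => 1,
                               SUFFIX => '.html',
                               UNLINK => 1);
print "temporary file: $filename\n";
close $fh;
```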

Added a check at startup that at least one argument (the URL) is given.

Note: the script requires wget to be installed as /usr/bin/wget.
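On systems where wget is installed somewhere else, the path could be looked up instead of hard-coded. The sketch below searches the directories in `PATH` using File::Spec. `find_in_path` is a hypothetical helper, not part of the script.

```perl
#!/usr/bin/perl
use strict;
use warnings;
use File::Spec;

# Hypothetical helper: return the full path of an executable found in
# one of the $ENV{PATH} directories, or undef if it is not found.
sub find_in_path {
    my ($program) = @_;
    foreach my $dir (File::Spec->path()) {
        my $candidate = File::Spec->catfile($dir, $program);
        # -x _ reuses the stat() result from the -f test.
        return $candidate if -f $candidate && -x _;
    }
    return undef;
}

my $wget = find_in_path('wget');
print defined $wget ? "wget found at $wget\n" : "wget not found in PATH\n";
```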

Here is my updated version of the source code:

#!/usr/bin/perl
# (C) Unknown author at https://exchange.nagios.org/directory/Plugins/Websites%2C-Forms-and-Transactions/check_url/details
#
# Changes:
# 2016-01-07: Stephan Ferraro
# Added the PID to the temporary file names so that the script can run in parallel with multiple processes.
# Added a check that at least one argument is given at script startup. -- Stephan Ferraro

use strict;
use warnings;

if ($#ARGV == -1)
{
    print STDERR "usage: check_url.pl URL\n";
    exit 3;    # UNKNOWN
}

my ($url) = @ARGV;

# Temporary file prefix includes the PID ($$) so that parallel runs do not collide.
my $tmp  = "/tmp/tmp_$$";
my $wget = "/usr/bin/wget --output-document=$tmp.html --no-check-certificate -S";

my @OK       = ("200");
my @WARN     = ("400", "401", "403", "404", "408");
my @CRITICAL = ("500", "501", "502", "503", "504");
my $TIMEOUT  = 20;

# Standard Nagios plugin exit codes.
my %ERRORS = ('OK'       => 0,
              'WARNING'  => 1,
              'CRITICAL' => 2,
              'UNKNOWN'  => 3);

my $state  = "UNKNOWN";
my $answer = "";

# Remove this run's temporary files; called on every exit path.
sub cleanup {
    unlink("$tmp.html", "$tmp.res", "$tmp.res1");
}

$SIG{'ALRM'} = sub {
    print "ERROR: check_url Time-Out $TIMEOUT s\n";
    cleanup();
    exit $ERRORS{"UNKNOWN"};
};

alarm($TIMEOUT);

# Single-quote the URL for the shell so that characters such as ? and &
# reach wget unchanged (an embedded ' is written as '\'').
(my $quoted_url = $url) =~ s/'/'\\''/g;
system("$wget '$quoted_url' 2>$tmp.res1");

if (! open STAT1, "<", "$tmp.res1") {
    print "$state: $wget returns no result!\n";
    cleanup();
    exit $ERRORS{$state};
}

close STAT1;

# Keep only the last HTTP status line (the final response after any redirects).
`cat $tmp.res1 | grep 'HTTP/1' | tail -n 1 > $tmp.res`;

my @lines;
if (open(STAT, "<", "$tmp.res")) {
    @lines = <STAT>;
    close STAT;
}

if (@lines && $lines[0] =~ /HTTP\/1\.\d+ (\d+) ?(.*)/) {
    my $errcode = $1;
    my $errmesg = $2;
    $answer = "$errcode $errmesg";

    if (chkerrwarn($errcode) == 1) {
        $state = 'WARNING';
    } elsif (chkerrcritical($errcode) == 2) {
        $state = 'CRITICAL';
    } elsif (chkerrok($errcode) == 0) {
        $state = 'OK';
    }
}

# Each check receives the status code as its argument and returns -1
# when the code is not in its list.
sub chkerrcritical {
    my ($err) = @_;
    foreach (@CRITICAL) {
        return 2 if $_ eq $err;
    }
    return -1;
}

sub chkerrwarn {
    my ($err) = @_;
    foreach (@WARN) {
        return 1 if $_ eq $err;
    }
    return -1;
}

sub chkerrok {
    my ($err) = @_;
    foreach (@OK) {
        return 0 if $_ eq $err;
    }
    return -1;
}

cleanup();

print "$state: $answer\n";

exit $ERRORS{$state};
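The script extracts the status by piping wget's `-S` output through `cat`, `grep` and `tail`. When wget follows a redirect, it prints one status line per response, so the last line is the status of the final page.

The same step can be done in Perl without starting extra processes. This is a sketch, not the reviewer's code. `last_http_status` is a hypothetical name, and the sample wget output is invented for illustration.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical helper: return (code, message) from the last HTTP status
# line in wget's -S output, or an empty list if there is none.
sub last_http_status {
    my ($wget_log) = @_;
    my @status_lines = grep { /HTTP\/1\.\d+ \d+/ } split /\n/, $wget_log;
    return unless @status_lines;
    my ($code, $message) = $status_lines[-1] =~ /HTTP\/1\.\d+ (\d+) ?(.*)/;
    return ($code, $message);
}

# Invented sample: a redirect followed by the final response.
my $sample = <<'END';
  HTTP/1.1 301 Moved Permanently
  Location: https://some.com/status
  HTTP/1.1 200 OK
  Content-Type: text/html
END

my ($code, $message) = last_http_status($sample);
print "$code $message\n";   # prints "200 OK"
```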

